Creating a Development Environment for Spark Structured Streaming, Kafka, and Prometheus


Docker-compose allows us to simulate pretty complex programming setups in our local environments. It is very fun to test some hard-to-maintain technologies such as Kafka and Spark using Docker-compose.

A few months ago, I created a demo application using Spark Structured Streaming, Kafka, and Prometheus within the same Docker-compose file. One can extend this list with an additional Grafana service. The codebase was in Python, and I was ingesting live cryptocurrency prices into Kafka and consuming them through Spark Structured Streaming. In this write-up, instead of talking about watermarks and sink types in Spark Structured Streaming, I will only talk about the Docker-compose file and how I set up my development environment using Spark, Kafka, Prometheus, and Zookeeper. For the whole codebase of my demo project, please refer to the Github repository.

Service Blocks

In the Docker-compose file, I needed the following services to keep my streaming data producer and consumer live and, at the same time, monitor the ingestion into Kafka:

- Spark standalone cluster: Consisting of one master and one worker node

  - Spark-master

  - Spark-worker

- Zookeeper: A requirement for Kafka (soon it will not be a requirement) to maintain the brokers and topics. For instance, if a broker joins or dies, Zookeeper informs the cluster.

- Kafka: A Message-oriented Middleware (MoM) for dealing with large streams of data. In this case, we have streams of crypto-currency prices.

- Prometheus-JMX-Exporter: An exporter that connects to Java Management Extensions (JMX) and translates the metrics into a format that Prometheus can understand. Since Kafka runs on the JVM, this is the magic service that enables us to scrape Kafka metrics automatically.

- Prometheus: A time-series database with monitoring and alerting tooling.

Spark Services

In the most basic setup for the standalone Spark cluster, we need one master and one worker node. You can use Docker-compose volumes for mounting folders. For Spark, perhaps the most common mounting reason is sharing the connectors (.jar files) or scripts.
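To make the mounting point concrete, here is a minimal Python sketch of how the mounted connectors could be handed to a job. It only builds the argument list for `spark-submit` (using its `--master` and `--jars` options); the helper name `build_spark_submit` and the script name `streaming_job.py` are hypothetical, and the `/connectors` and `/scripts` paths assume the volume mounts used later in this post.

```python
from pathlib import Path
from typing import List


def build_spark_submit(script: str, connectors_dir: str,
                       master: str = "spark://spark-master:7077") -> List[str]:
    """Build a spark-submit argument list that ships every mounted .jar.

    Hypothetical helper: it only assembles the command, it does not run it.
    """
    # Sort so the resulting command is the same on every run.
    jars = sorted(str(path) for path in Path(connectors_dir).glob("*.jar"))
    cmd = ["spark-submit", "--master", master]
    if jars:
        # --jars takes one comma-separated list of jar paths.
        cmd += ["--jars", ",".join(jars)]
    cmd.append(script)
    return cmd


if __name__ == "__main__":
    # Assumed container paths; streaming_job.py is a made-up script name.
    print(" ".join(build_spark_submit("/scripts/streaming_job.py", "/connectors")))
```

Where the `spark-submit` binary lives inside the container depends on the image, so the sketch assumes it is on the PATH.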

For the Spark images, I used the ones that Big Data Europe (BDE) publishes on Docker Hub, as they maintain a very stable and extensive set of Spark and Hadoop images. This also saved me some redundant work, like creating a separate Dockerfile per Spark node.

I also needed to take care of the networking within the Docker-compose settings. Hence, I created a bridge network with the custom name "crypto-network". A bridge network lets standalone containers on the same host communicate with each other. For more information about the different network drivers in Docker, please refer to the Docker documentation, which is very fun to read. While setting up, I forwarded the Spark Web UIs to host ports other than 8080 to prevent conflicts with the JMX-Exporter. Besides, I made the worker node depend on the master node to fix the order of container creation.
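The host port clash mentioned above is easy to check mechanically. The following Python sketch is only an illustration: the helper `host_port_conflicts` is hypothetical, it assumes the short "HOST:CONTAINER" mapping form, and the mappings are copied from the Docker-compose file of this post.

```python
from collections import defaultdict
from typing import Dict, List


def host_port_conflicts(ports: Dict[str, List[str]]) -> Dict[int, List[str]]:
    """Return the host ports that more than one service tries to publish."""
    claimed = defaultdict(list)
    for service, mappings in ports.items():
        for mapping in mappings:
            # Assumes the short "HOST:CONTAINER" form used in this post.
            host_port = int(mapping.split(":")[0])
            claimed[host_port].append(service)
    return {port: names for port, names in claimed.items() if len(names) > 1}


# Port mappings as used in this post: every host port is claimed only once.
POST_PORTS = {
    "spark-master": ["8082:8080", "7077:7077"],
    "spark-worker-1": ["8083:8081"],
    "kafka-jmx-exporter": ["8080:8080"],
}

if __name__ == "__main__":
    print(host_port_conflicts(POST_PORTS))
    # Forwarding the Spark master UI to host port 8080 would clash.
    naive = dict(POST_PORTS, **{"spark-master": ["8080:8080", "7077:7077"]})
    print(host_port_conflicts(naive))
```

Container ports may repeat freely (both the Spark master and the JMX-Exporter listen on 8080 inside their containers); only the host side of each mapping has to be unique.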

Lastly, following the BDE example, I overrode SPARK_MASTER through the environment variables. Here I am sharing the Spark component of the demo application.

```yaml
---
version: "3.2"
services:

  spark-master:
    image: bde2020/spark-master:2.2.2-hadoop2.7
    container_name: spark-master
    networks:
      - crypto-network
    volumes:
      # Share connectors (.jar files) and scripts with the container
      - ./connectors:/connectors
      - ./:/scripts/
    ports:
      # Web UI forwarded to host port 8082 instead of 8080
      - 8082:8080
      - 7077:7077
    environment:
      - INIT_DAEMON_STEP=false

  spark-worker-1:
    image: bde2020/spark-worker:2.2.2-hadoop2.7
    container_name: spark-worker-1
    networks:
      - crypto-network
    depends_on:
      - spark-master
    ports:
      - 8083:8081
    environment:
      - "SPARK_MASTER=spark://spark-master:7077"

networks:
  crypto-network:
    driver: "bridge"
```

You can start the services with:

```bash
docker-compose up
```

Then you can see the Spark master node setup with:

```bash
docker exec -it spark-master bash
```

Kafka Services

To run Kafka in a standalone mode, I needed Zookeeper and Kafka itself with some fancy environment variables. Basically, Kafka needs to find the Zookeeper client port and it needs to advertise the correct ports to Spark applications.

To run this setup I used the Confluent images. Here, I am sharing the Kafka related services block. A Confluent image already allows us to set up:

- Kafka topics by using the environment variables:

  - KAFKA_CREATE_TOPICS: Topic names to be created

  - KAFKA_AUTO_CREATE_TOPICS_ENABLE: Whether the broker creates a topic automatically the first time it is used

  - KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: Replication factor of the internal offsets topic, set to 1 here because there is a single broker

- Connection to Zookeeper using the environment variable KAFKA_ZOOKEEPER_CONNECT

- A custom broker id for a particular node with KAFKA_BROKER_ID

- Advertising the correct ports for the Docker network internal services or external connections:

  - KAFKA_INTER_BROKER_LISTENER_NAME: Listener name used for the communication between brokers

  - KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: Mapping from each listener name to its security protocol

  - KAFKA_ADVERTISED_LISTENERS: Listener setup for internal and external networking. This is a bit tricky: with the example below, if I consume or produce any message inside the internal Docker network, I need to connect to kafka:29092. From outside of Docker, I can use a consumer or producer via localhost:9092. For more information, here is an awesome explanation.
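The internal versus external address choice can be sketched in a few lines of Python. This is an illustration rather than code from the demo project: `parse_listeners` and `bootstrap_server` are hypothetical helpers, and the `inside_docker` flag is an assumption about how a client would know where it runs.

```python
from typing import Dict

# Value of KAFKA_ADVERTISED_LISTENERS as configured in this post.
ADVERTISED = "PLAINTEXT://kafka:29092,PLAINTEXT_HOST://localhost:9092"


def parse_listeners(advertised: str) -> Dict[str, str]:
    """Map each listener name to the host:port it advertises."""
    listeners = {}
    for entry in advertised.split(","):
        name, _, address = entry.strip().partition("://")
        listeners[name] = address
    return listeners


def bootstrap_server(advertised: str, inside_docker: bool) -> str:
    """Pick the address a client should use as its bootstrap server."""
    listeners = parse_listeners(advertised)
    # Containers on crypto-network use the internal listener;
    # processes on the host use the one advertised on localhost.
    return listeners["PLAINTEXT" if inside_docker else "PLAINTEXT_HOST"]


if __name__ == "__main__":
    print(bootstrap_server(ADVERTISED, inside_docker=True))   # kafka:29092
    print(bootstrap_server(ADVERTISED, inside_docker=False))  # localhost:9092
```

A Spark job or producer running as a container on the same network would take the first address, while a script started from the host shell would take the second.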

```yaml
---
version: "3.2"
services:
  zookeeper:
    image: confluentinc/cp-zookeeper
    container_name: zookeeper
    networks:
      - crypto-network
    environment:
      ZOOKEEPER_CLIENT_PORT: 2181
      ZOOKEEPER_TICK_TIME: 2000

  kafka:
    image: confluentinc/cp-kafka
    container_name: kafka
    depends_on:
      - zookeeper
    networks:
      - crypto-network
    ports:
      - 9092:9092
      - 30001:30001
    environment:
      KAFKA_CREATE_TOPICS: crypto_raw,crypto_latest_trends,crypto_moving_average
      KAFKA_BROKER_ID: 1
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT
      KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
      # kafka:29092 inside the Docker network, localhost:9092 from the host
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka:29092,PLAINTEXT_HOST://localhost:9092
      KAFKA_AUTO_CREATE_TOPICS_ENABLE: "true"
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
      KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 100

networks:
  crypto-network:
    driver: "bridge"
```

Prometheus Services

In this project, I wanted to scrape Kafka's metrics automatically. Hence, apart from the Prometheus service itself, I also needed the JMX-Exporter. And I realized that it is the coolest kid in a Docker-compose file.
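To get a feeling for what Prometheus receives from the exporter, the sketch below parses the plain-text metrics format in Python. It is a simplified illustration: it assumes samples carry no trailing timestamp, the metric names in `SAMPLE` are made up, and `fetch_metrics` assumes the exporter is published on localhost:8080 as in the full Docker-compose file of this post.

```python
from typing import List, Tuple
from urllib.request import urlopen

# Made-up sample in the Prometheus text format; real metric names
# depend on the rules in kafka.yml.
SAMPLE = """\
# HELP example_gauge An invented gauge
# TYPE example_gauge gauge
example_gauge 42.0
example_messages_in_total{topic="crypto_raw"} 7.0
"""


def parse_metrics(text: str) -> List[Tuple[str, str, float]]:
    """Return (metric name, raw label block, value) for every sample line."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        # Assumes no trailing timestamp, so the value is the last token.
        series, _, value = line.rpartition(" ")
        name, brace, labels = series.partition("{")
        samples.append((name, brace + labels, float(value)))
    return samples


def fetch_metrics(url: str = "http://localhost:8080/metrics") -> str:
    """Download the exporter output (needs the containers to be running)."""
    with urlopen(url) as response:
        return response.read().decode("utf-8")


if __name__ == "__main__":
    for sample in parse_metrics(SAMPLE):
        print(sample)
```

With the stack running, `parse_metrics(fetch_metrics())` would list whatever the exporter currently exposes for Kafka.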

For both Prometheus and its JMX-Exporter, I needed custom Dockerfiles, as they require some templates to be aware of each other. I used a separate ./tools/ folder to keep my monitoring related settings. Within ./tools/prometheus-jmx-exporter, I had a confd folder, which Confd uses to configure the Docker container at run-time. Here the file structure is as follows:

```
.
├── prometheus
│   ├── Dockerfile
│   └── prometheus.yml
└── prometheus-jmx-exporter
    ├── Dockerfile
    ├── confd
    │   ├── conf.d
    │   │   ├── kafka.yml.toml
    │   │   └── start-jmx-scraper.sh.toml
    │   └── templates
    │       ├── kafka.yml.tmpl
    │       └── start-jmx-scraper.sh.tmpl
    └── entrypoint.sh
```

Let's start with the Prometheus image as it is more straightforward. We need to use a custom Dockerfile to get the config with custom scraper settings.

The Dockerfile will be:

```dockerfile
FROM prom/prometheus:v2.8.1

ADD ./prometheus.yml /etc/prometheus/prometheus.yml

CMD [ "--config.file=/etc/prometheus/prometheus.yml","--web.enable-admin-api" ]
```

And the prometheus.yml looks as follows, with a scrape interval of 5 seconds. In prometheus.yml, Prometheus targets a service called kafka-jmx-exporter on port 8080. Hence, in the Docker-compose file, the JMX-Exporter service has to carry the same name as the target.

```yaml
global:
  scrape_interval: 5s
  evaluation_interval: 5s

scrape_configs:
  - job_name: 'kafka'
    scrape_interval: 5s
    static_configs:
      - targets: ['kafka-jmx-exporter:8080']
```

To create the JMX-Exporter image, I needed more tweaks. Let's start with the Dockerfile. The image for the JMX-Exporter uses a Java base image. It then downloads the JMX Prometheus .jar from the Maven repository and writes it to /opt/jmx_prometheus_httpserver/jmx_prometheus_httpserver.jar. Next, it downloads Confd, stores it in /usr/local/bin/confd, and gives it execute permissions. Lastly, it copies the entrypoint into /opt/entrypoint.sh.

```dockerfile
FROM java:8

RUN mkdir /opt/jmx_prometheus_httpserver && wget 'https://repo1.maven.org/maven2/io/prometheus/jmx/jmx_prometheus_httpserver/0.11.0/jmx_prometheus_httpserver-0.11.0-jar-with-dependencies.jar' -O /opt/jmx_prometheus_httpserver/jmx_prometheus_httpserver.jar

ADD https://github.com/kelseyhightower/confd/releases/download/v0.16.0/confd-0.16.0-linux-amd64 /usr/local/bin/confd
COPY confd /etc/confd
RUN chmod +x /usr/local/bin/confd

COPY entrypoint.sh /opt/entrypoint.sh
ENTRYPOINT ["/opt/entrypoint.sh"]
```

The entrypoint.sh only runs Confd and then start-jmx-scraper.sh. Confd first renders the Kafka and JMX scraper templates to the destinations declared in the .toml files, and the rendered script then runs the downloaded jmx_prometheus_httpserver.jar file. The entrypoint.sh looks like this:

```bash
#!/bin/bash
/usr/local/bin/confd -onetime -backend env
/opt/start-jmx-scraper.sh
```

And the start-jmx-scraper.sh template is as follows. The environment variables in the Docker-compose file (JMX_PORT, JMX_HOST, HTTP_PORT, JMX_EXPORTER_CONFIG_FILE) supply the value for each key used in the command:

```bash
#!/bin/bash
java \
 -Dcom.sun.management.jmxremote.ssl=false \
 -Djava.rmi.server.hostname={{ getv "/jmx/host" }} \
 -Dcom.sun.management.jmxremote.authenticate=false \
 -Dcom.sun.management.jmxremote.port={{ getv "/jmx/port" }} \
 -jar /opt/jmx_prometheus_httpserver/jmx_prometheus_httpserver.jar \
 {{ getv "/http/port" }} \
 /opt/jmx_prometheus_httpserver/{{ getv "/jmx/exporter/config/file" }}
```

With the given custom Docker images for Prometheus automatically scraping Kafka, the full Docker-compose file for the demo project is as follows:

1---2version: "3.2"3services:4 zookeeper:5 image: confluentinc/cp-zookeeper6 container_name: zookeeper7 networks:8 - crypto-network9 environment:10 ZOOKEEPER_CLIENT_PORT: 218111 ZOOKEEPER_TICK_TIME: 200012

13 kafka:14 image: confluentinc/cp-kafka15 container_name: kafka16 depends_on:17 - zookeeper18 networks:19 - crypto-network20 ports:21 - 9092:909222 - 30001:3000123 environment:24 KAFKA_CREATE_TOPICS: crypto_raw,crypto_latest_trends,crypto_moving_average25 KAFKA_BROKER_ID: 126 KAFKA_ZOOKEEPER_CONNECT: zookeeper:218127 KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT28 KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT29 KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka:29092,PLAINTEXT_HOST://localhost:909230 KAFKA_AUTO_CREATE_TOPICS_ENABLE: "true"31 KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 132 KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 10033 KAFKA_JMX_PORT: 3000134 KAFKA_JMX_HOSTNAME: kafka35

36 kafka-jmx-exporter:37 build: ./tools/prometheus-jmx-exporter38 container_name: jmx-exporter39 ports:40 - 8080:808041 links:42 - kafka43 networks:44 - crypto-network45 environment:46 JMX_PORT: 3000147 JMX_HOST: kafka48 HTTP_PORT: 808049 JMX_EXPORTER_CONFIG_FILE: kafka.yml50

51 prometheus:52 build: ./tools/prometheus53 container_name: prometheus54 networks:55 - crypto-network56 ports:57 - 9090:909058

59 spark-master:60 image: bde2020/spark-master:2.2.2-hadoop2.761 container_name: spark-master62 networks:63 - crypto-network64 volumes:65 - ./connectors:/connectors66 - ./:/scripts/67 ports:68 - 8082:808069 - 7077:707770 environment:71 - INIT_DAEMON_STEP=setup_spark72

73 spark-worker-1:74 image: bde2020/spark-worker:2.2.2-hadoop2.775 container_name: spark-worker-176 networks:77 - crypto-network78 depends_on:79 - spark-master80 ports:81 - 8083:808182 environment:83 - "SPARK_MASTER=spark://spark-master:7077"84

85 producer:86 build:87 context: .88 dockerfile: ./Dockerfile.producer89 container_name: producer90 depends_on:91 - kafka92 networks:93 - crypto-network94

95networks:96 crypto-network:97 driver: "bridge"As the Docker-compose contains an additional Producer service when we run the following, we can test our Kafka topic messages per minute by checking the <IP_LOCAL>:9000:

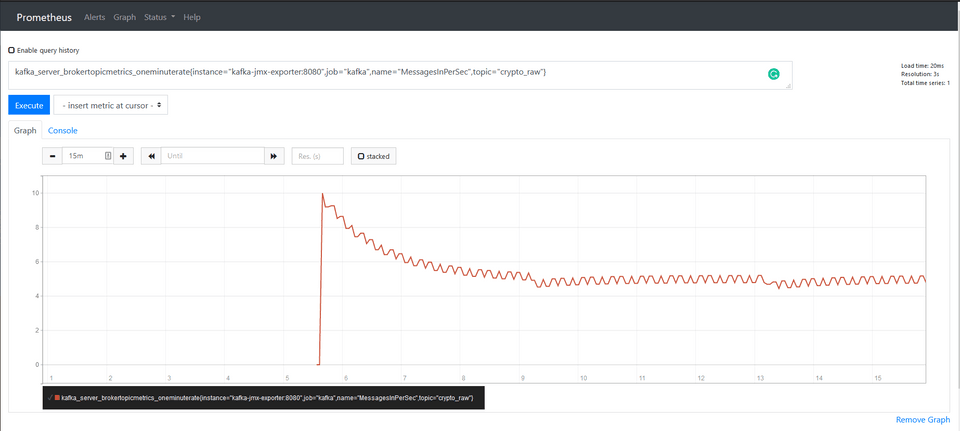

1docker-compose upHere the output of the Prometheus UI will be as follows:
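The same check can be scripted against the Prometheus HTTP API instead of being read off the UI. The Python sketch below is an illustration under a few assumptions: Prometheus is reachable on localhost:9090 as published above, the helper names are hypothetical, and the query uses the built-in `up` metric, which Prometheus sets to 1 for every target it scraped successfully.

```python
import json
from typing import Dict, Tuple
from urllib.parse import urlencode
from urllib.request import urlopen


def query_url(base_url: str, promql: str) -> str:
    """Build the instant-query URL of the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def extract_values(payload: dict) -> Dict[Tuple[Tuple[str, str], ...], float]:
    """Map each series' sorted label pairs to its value in an instant-query response."""
    if payload.get("status") != "success":
        raise ValueError(f"query failed: {payload}")
    values = {}
    for series in payload["data"]["result"]:
        # Each sample is a [timestamp, "value as string"] pair.
        _, value = series["value"]
        values[tuple(sorted(series["metric"].items()))] = float(value)
    return values


def instant_query(base_url: str, promql: str):
    """Run the query (needs the Prometheus container to be running)."""
    with urlopen(query_url(base_url, promql)) as response:
        return extract_values(json.load(response))


if __name__ == "__main__":
    # 1.0 means Prometheus reached the kafka-jmx-exporter target.
    print(instant_query("http://localhost:9090", 'up{job="kafka"}'))
```

The job label `kafka` comes from the prometheus.yml shown earlier; any Kafka metric name exposed by the exporter could be queried the same way.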

Last Words

This was a demo project that I made for studying Watermarks and Windowing functions in Streaming Data Processing. Therefore I needed to create a custom producer for Kafka, and consume those using Spark Structured Streaming. Although the development phase of the project was super fun, I also enjoyed creating this pretty long Docker-compose example.

In case more detail is needed, I am sharing the Github repository.