Windowing in Streaming Data; Theory and a Scikit-Multiflow Example

— python, data-science, streaming-data, windowing — 3 min read

With the streaming data, it is almost impossible to store sufficient statistics all over the examples, because of the memory constraints. Moreover, the analysts in the information systems departments are usually more interested in the recent changes in the databases. With time, the properties of the datasets might change and the older data may not give the most important information for a statistical learning algorithm. The changes in the properties of data over time is called concept drift. To increase memory efficiency and overcome concept drift, windowing mechanisms are developed [1].

In this short review, I want to present four commonly known examples of windowing algorithms on a theoretical level. Those include from simplest to the most complex: Landmark, Sliding, Time-Fading, and Adaptive Sliding.

For the applied level, scikit-multiflow [2] provides a nice complementary for scikit-learn with streaming analytics algorithms. I applied ADWIN for detecting concept drift, out of my enthusiasm on the library and the algorithm. I will be sharing this.

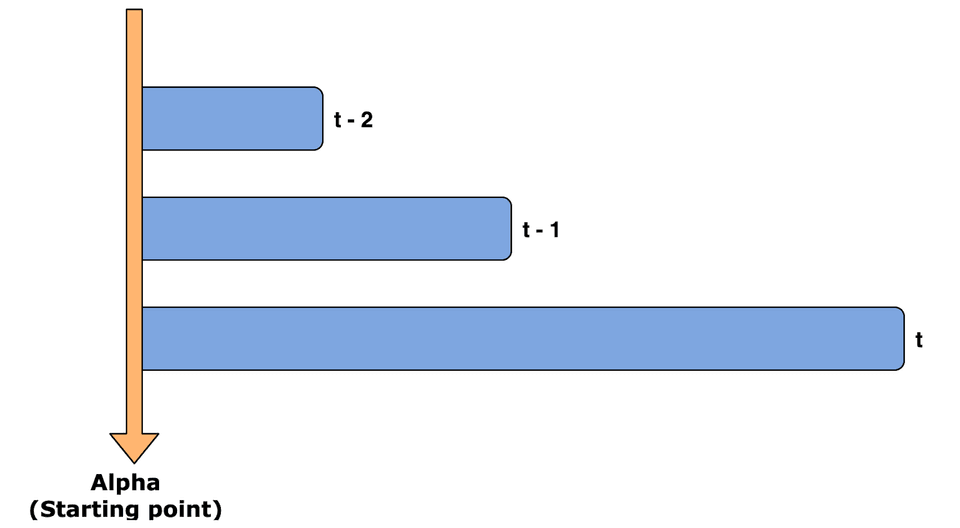

Landmark

Landmark contains the whole historical data, starting from a landmark point. In the example chart, the alpha point is chosen as a landmark. A system, following the landmark mechanism, is going to include all the data from the alpha point. Landmark mechanism is used when periodic results are needed for the statistical analysis (yearly, monthly, quarterly).

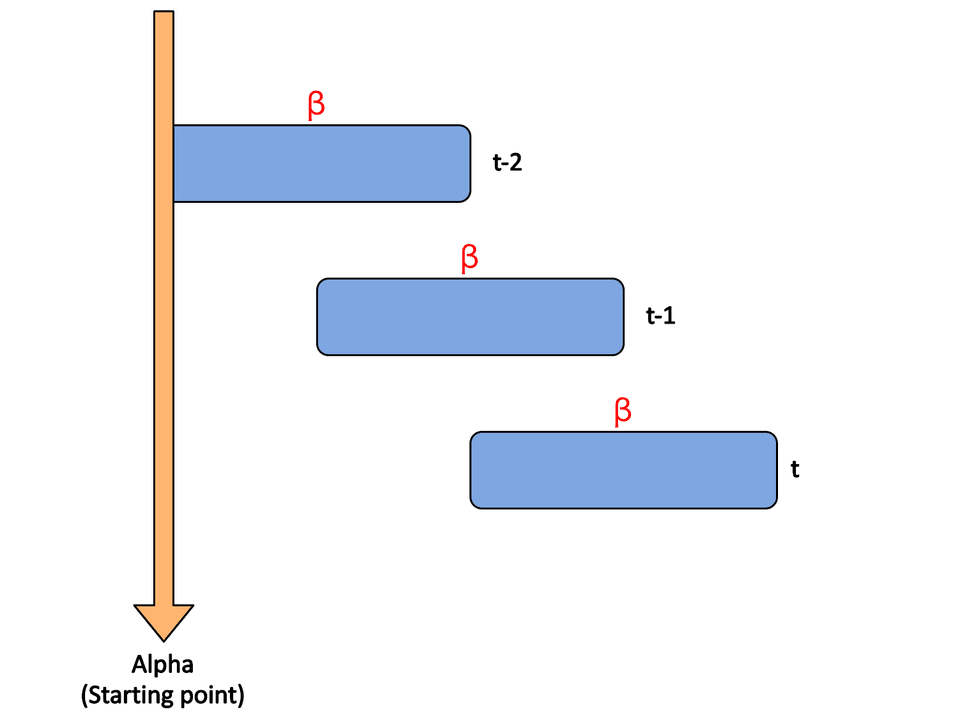

Sliding Window

Sliding Window contains the data belonging to the time interval with fixed recency (ꞵ) and binary weighting. ꞵ defines the range of the window length. Hence, the starting point (timestamp) is calculated by subtracting the ꞵ from the current timestamp (t). This requires in each iteration, the older data to be deleted. A sliding window is used when the exact number of data points is critical for the statistical analysis, e.g., traffic monitoring, topic extraction on a news portal [3].

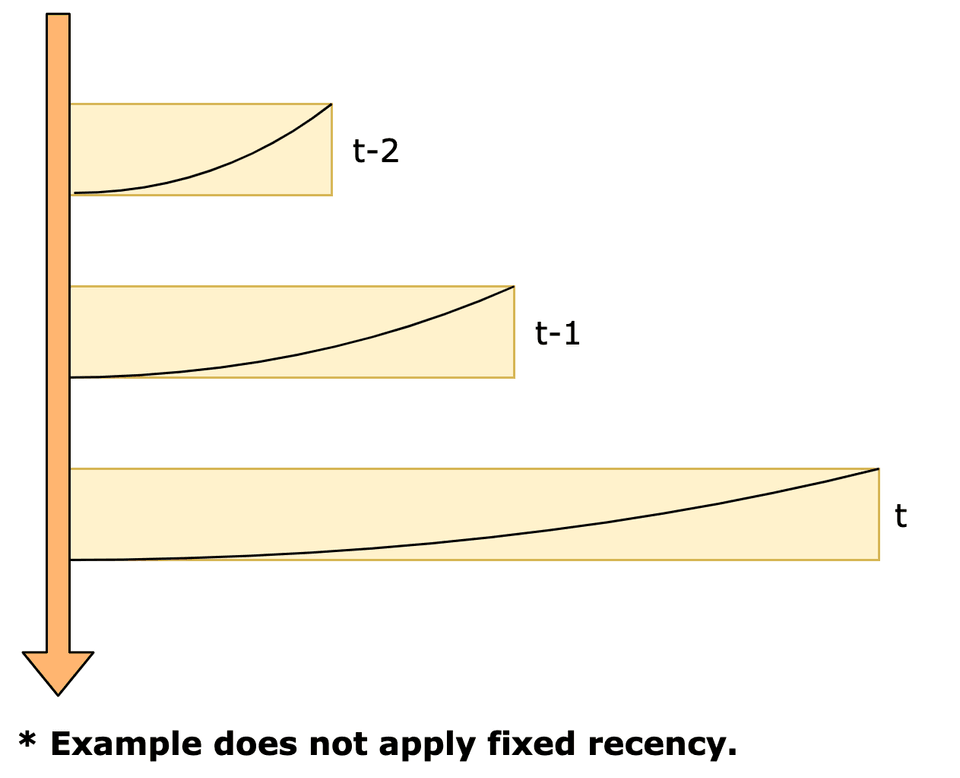

Time-Fading Window

A time-fading window is also named as a damped window. It contains the data belonging to the time frame with fixed recency (ꞵ) and a linear or exponential decaying factor (f). The continuous decaying logic takes the recency into account and emphasizes more the recent data less the older data with a certain threshold.

The time-fading window method can be considered as a special case of a sliding window or landmark method, depending on how the recency is defined.

Adaptive Sliding Window (ADWIN)

ADWIN adjusts the mean values of the objects and keeps those below a threshold level (epsilon). If the mean values significantly deviate from a threshold, it deletes the corresponding old part. It is adaptive to the changing data. For instance, if the change is taking place the window size will shrink automatically, else if the data is stationary the window size will grow to improve the accuracy.

A better visualization is presented in Albert Bifet's (author of the Adaptive Stream Mining: Pattern Learning and Mining from Evolving Data Streams) personal website.

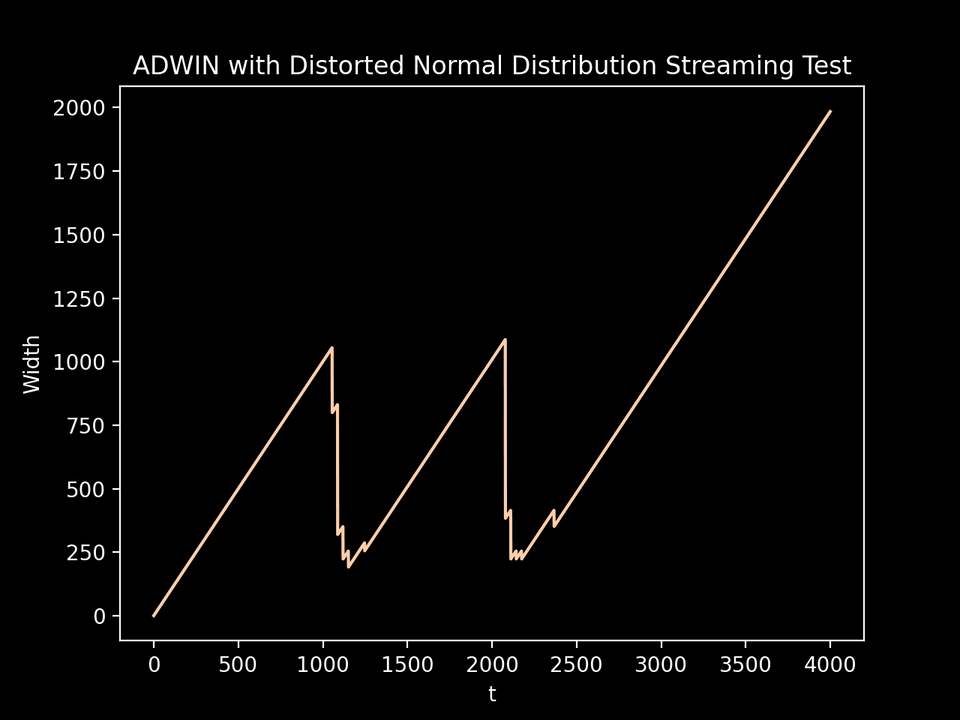

The intuition behind using ADWIN is to keep statistics from a window of variable size while detecting concept drift. By using the scikit-multiflow library I simulated a distorted data stream with a normal distribution.

The code below is used for catching the concept drift in the normal distribution (with a mean of 0 and a standard deviation of 0.25). I changed the stream values with the indices between 1000 and 2000 with a different normal distribution (with a mean of 1 and a standard deviation of 0.5). Hence, I expected a width change (decrease) between the stream values 1000 till 2000 and an increase in width till the end of the stream.

1import numpy as np2

3from skmultiflow.drift_detection.adwin import ADWIN4

5adwin = ADWIN(delta=0.0002)6SEED = np.random.seed(42)7

8# Simulating a data stream as a normal distribution of 1's and 0's9mu, sigma = 0, 0.25 # mean and standard deviation10data_stream = np.random.normal(mu, sigma, 4000)11

12# Changing the data concept from index 1000 to 200013mu_broken, sigma_broken = 1, 0.514data_stream[1000:2000] = np.random.normal(mu_broken, sigma_broken, 1000)15

16width_vs_variance = []17

18# Adding stream elements to ADWIN and verifying if drift occurred19for idx in range(4000):20

21 adwin.add_element(data_stream[idx])22

23 if adwin.detected_change():24 print(f"Change in index {idx} for stream value {data_stream[idx]}")25

26 width_vs_variance.append((adwin.width, adwin.variance, idx))The output of this small test for observing the width changes with detected concept drift in our streaming data is displayed in the chart below. As expected, we can observe a zigzag (return back) between the indices (t) 1000 and 2000, then the width grows till the end of the stream.

References

[1] Gama, J., Sebastião, R. & Rodrigues, P.P. On evaluating stream learning algorithms. Mach Learn 90, 317–346 (2013).

[2] Montiel, J., Read, J., Bifet, A., & Abdessalem, T. (2018). Scikit-multiflow: A multi-output streaming framework. The Journal of Machine Learning Research, 19(72):1−5.

[3] Youn, J., Choi, J., Shim, J., & Lee, S. (2017). Partition-Based Clustering with Sliding Windows for Data Streams. Lecture Notes in Computer Science, 289–303.